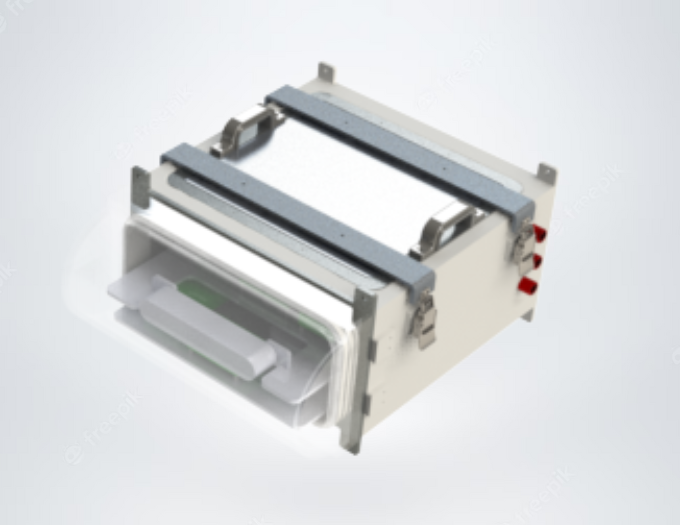

The hull houses all the electronics and batteries that make the robot function. Anything that is not waterproofed, or cannot be waterproofed, is placed in the hull.

It has two access points: the top lid and the front cap. Both are held in place by aluminum straps to maintain their O-ring seals. Any wiring routed outside the hull passes through a penetrator that is permanently potted with marine epoxy. The penetrators are fitted with O-rings to maintain a seal once installed. The front cap is clear acrylic so that our camera can see through it, and the top lid is machined aluminum.

This year we changed hulls, moving from Auri to Arctos. Arctos has a rectangular hull made entirely of aluminum, apart from the acrylic front cap. The biggest improvement in the hull this year was the cooling system designed for the onboard computer, an NVIDIA Jetson Orin. Because of its high thermal output, the stock heatsink could not adequately cool the CPU inside a sealed hull. To overcome this, we took advantage of the aluminum hull's thermal conductivity and its contact with the surrounding water to dissipate the CPU heat. The Orin is mounted with its heat spreader directly in contact with the hull, maximizing conductive heat transfer into the hull and then into the water.
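
To give a rough sense of why conduction through the hull wall works well, the sketch below applies Fourier's law for steady one-dimensional conduction through a flat wall, ΔT = Q·d / (k·A). Every number in it is an illustrative assumption rather than a measurement from Arctos: the heat load, wall thickness, and contact area are hypothetical, and the conductivity is a commonly quoted figure for 6061-T6 aluminum (the hull alloy is not stated here). It also ignores contact resistance at the heat spreader and convection on the water side, so it only bounds the wall's own contribution.

```python
def wall_temperature_drop(power_w, thickness_m, conductivity_w_mk, area_m2):
    """Steady-state temperature drop (K) across a flat wall, from Fourier's law."""
    return power_w * thickness_m / (conductivity_w_mk * area_m2)


# All values below are hypothetical, chosen only to illustrate the calculation.
drop_k = wall_temperature_drop(
    power_w=40.0,             # assumed heat load from the computer
    thickness_m=0.006,        # assumed hull wall thickness
    conductivity_w_mk=167.0,  # commonly quoted value for 6061-T6 aluminum
    area_m2=0.01,             # assumed heat spreader contact area
)
print(f"Temperature drop across the wall: {drop_k:.2f} K")
```

Under these assumed values the drop across the wall itself is a small fraction of a kelvin, which suggests that in a setup like this the wall is unlikely to be the limiting step in the heat path.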

The dropper subsystem is used to drop two markers into the correct bins of the Bin task.

A 3D printed housing holds the droppers in place, and a curved track supports the markers from the bottom of the housing. A servo rotates to slide the track, releasing the markers one at a time. A 3D printed twist-release cap on each housing allows the markers to be reloaded easily.
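
The one-at-a-time release can be sketched in software as a servo stepping through a fixed list of track positions. This is a minimal illustration, not the team's control code: the `Dropper` class, the `set_servo_angle` callback, and the angles in `TRACK_ANGLES` are all hypothetical.

```python
from typing import Callable

# Hypothetical servo angles in degrees; real values depend on the track geometry.
# The index is the number of markers released so far.
TRACK_ANGLES = [0.0, 45.0, 90.0]


class Dropper:
    """Releases markers one at a time by stepping a servo-driven track."""

    def __init__(self, set_servo_angle: Callable[[float], None]) -> None:
        self._set_servo_angle = set_servo_angle
        self.released = 0
        self._set_servo_angle(TRACK_ANGLES[0])  # start with both markers held

    def drop_next(self) -> bool:
        """Advance the track one step; return False if no markers remain."""
        if self.released >= len(TRACK_ANGLES) - 1:
            return False
        self.released += 1
        self._set_servo_angle(TRACK_ANGLES[self.released])
        return True

    def reload(self) -> None:
        """Return the track to the loaded position after markers are replaced."""
        self.released = 0
        self._set_servo_angle(TRACK_ANGLES[0])
```

For bench testing, passing `print` (or a list's `append`) as `set_servo_angle` shows the sequence of commanded angles without any hardware attached.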

This year we continued improving last year's dropper subsystem design to prepare for its first use at competition. Our main focus was making the marker sink accurately, since inaccurate sinking was the biggest reason the dropper system didn't see use at competition last year. To achieve this, we fully redesigned the marker itself, adding a custom-machined aluminum nose to ensure that it is nose-heavy. We also 3D printed the body with helical fins, which spin the marker as it sinks so that the resulting gyroscopic effect keeps it balanced.

The claw is used in two competition tasks: picking up the lids in the Bin task and picking up the chevrons in the DHD task.

It has two mirrored grippers on a curved track, which lets the claw fold fully into the profile of Arctos’ frame when not in use and extend past the frame when in use. Two servos drive the grippers along the track. Each gripper has a 3D printed pincer with an exterior made from flexible TPU filament and rigid pinned ribs, which allow the pincer to conform to many shapes, including the chevron geometry.

The claw subsystem was completely redesigned this year with the goal of simplifying the mechanism while broadening its scope of use. After testing several concept actuation mechanisms, we chose a curved track with mirrored grippers. This layout offers a large range of motion, which lets the grippers tuck away when not in use, and the path the grippers follow can be easily modified in the future. The 3D printed soft pincers were designed to conform to the unique geometry of the chevrons for reliable manipulation. This design also makes it easy to modify the gripper structure in future iterations.

The torpedo subsystem fires two spring-launched torpedoes at targets in the torpedo task.

The torpedoes are held in place with magnets, and the springs are compressed by a sheet metal plate that slides along guide rods for uniform release. A servo rotates to translate the release plate in either direction, which releases the corresponding torpedo, so the torpedoes fire one at a time.
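
The bidirectional release can be sketched as a servo with a neutral position and one release angle per torpedo. This is an illustration under stated assumptions, not the team's firmware: the `TorpedoLauncher` class, the `LEFT`/`RIGHT` tube names, the angles, and the return to neutral after each shot are all hypothetical.

```python
from enum import Enum
from typing import Callable, Set


class Tube(Enum):
    # Hypothetical names for the two torpedo positions.
    LEFT = "left"
    RIGHT = "right"


# Hypothetical servo angles in degrees; real values depend on the plate linkage.
NEUTRAL_ANGLE = 90.0
RELEASE_ANGLES = {Tube.LEFT: 60.0, Tube.RIGHT: 120.0}


class TorpedoLauncher:
    """Fires one torpedo per command by moving the release plate off center."""

    def __init__(self, set_servo_angle: Callable[[float], None]) -> None:
        self._set_servo_angle = set_servo_angle
        self.fired: Set[Tube] = set()
        self._set_servo_angle(NEUTRAL_ANGLE)  # both springs held compressed

    def fire(self, tube: Tube) -> bool:
        """Release the chosen torpedo; return False if it was already fired."""
        if tube in self.fired:
            return False
        self._set_servo_angle(RELEASE_ANGLES[tube])  # move plate toward this tube
        self.fired.add(tube)
        self._set_servo_angle(NEUTRAL_ANGLE)  # assumed: re-center after the shot
        return True
```

Tracking which tubes have fired keeps a repeated command from moving the plate again, so the second torpedo is only released when it is explicitly requested.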

The torpedo system is the only mechanical subsystem that we used in the last competition. Although it worked, it had many reliability issues, and this year's iteration focused on fixing them. We completely redesigned the release mechanism, moving away from a large lever arm that held the torpedo and springs together, because that arrangement required the torpedo to have a high-drag geometry for the arm to hold it. Instead, the torpedoes are 3D printed with magnets embedded during the print process, and these magnets hold the torpedoes in place within the housing prior to launch. The springs are compressed by a separate sheet metal plate, with two guide rods on either side of the torpedo for increased stability and reduced friction. The housing now has a smaller front profile and smoother geometry to reduce the overall drag of the subsystem.